Establishing a Theoretical Understanding of Machine Learning

"It's kind of like physics in its formative stages—Newton asking what makes the apple fall down," says Sanjeev Arora, Visiting Professor in the School of Mathematics, trying to explain the current scientific excitement about machine learning. "Thousands of years went by before science realized it was even a question worth asking. An analogous question in machine learning is 'What makes a bunch of pixels a picture of a pedestrian?' Machines are approaching human capabilities in such tasks, but we lack basic mathematical understanding of how and why they work."

The core idea of machine learning, according to Arora, involves training a machine to search for patterns in data and improve from experience and interaction. This is closely analogous to classic curve-fitting, a mathematical technique known for centuries. Training involves algorithms, the theoretical foundations of which are of great interest in mathematics (see related article). "Machine learning is a very important branch of the theory of computation and computational complexity," says Avi Wigderson, Herbert H. Maass Professor in the School of Mathematics, who heads the Theoretical Computer Science and Discrete Mathematics program. "It is something that needs to be understood and explained because it seems to have enormous power to do certain things: play games, recognize images, predict all sorts of behaviors. There is a really large array of things that these algorithms can do, and we don't understand why or how. Machine learning definitely suits our general attempts at IAS to understand algorithms, and the power and limits of computational devices."

Since 2017, Arora has been leading a three-year program in theoretical machine learning at the Institute for Advanced Study, supported by a $2 million grant from Eric and Wendy Schmidt. This year, Arora's team consists of two postdoctoral Members, Nadav Cohen and Sida Wang, both of whom were involved in the program in 2017. Arora's theoretical machine learning group is specifically focused on fundamental principles related to how algorithms behave in machines, how they learn, and why they are able to make desired predictions and decisions. In spring 2019, they will be joined by Visiting Professor Philippe Rigollet, Associate Professor of Mathematics at the Massachusetts Institute of Technology, and Visitor Richard Zemel, Professor of Computer Science at the University of Toronto and Research Director of the Vector Institute of Artificial Intelligence. Next year, fifteen to twenty Members will join the School's special year program "Optimization, Statistics, and Theoretical Machine Learning" to develop new models, modes of analysis, and novel algorithms.

Why has machine learning become so pervasive in the past decade? According to Arora, the cause is a symbiosis among three factors: data, hardware, and commercial reward. "Leading tech companies rely on such algorithms," says Arora. "This creates a self-reinforcing phenomenon: good algorithms bring them users, which in turn yields more user data for improving their algorithms, and the resulting rise in profits further lets them invest in better researchers, algorithms, and hardware."

With intense progress and momentum in the field coming from industry, the number of machine learning researchers who are trying to establish theoretical understanding is relatively small. But such study is essential—for reasons beyond its tantalizing connections to questions in mathematics and even physics. "Imagine if we didn't have a theory of aviation and could not predict how airplanes would behave under new conditions," says Cohen, current Member in the theoretical machine learning program. "Soon you will be putting your life in the hands of an algorithm when you are sitting in a self-driving car or being treated in an operating room. We can't yet fully understand or predict the properties of today's machine learning algorithms."

The most successful model of machine learning, known as deep learning, came to dominate the field in 2012, when a team of researchers in Toronto showed that neural networks (also called deep networks or, more broadly, deep learning models) dramatically outperformed existing methods on image recognition. Since then, deep learning has led to rapid industry-driven advances in artificial intelligence, such as self-driving cars, translation systems, medical image analysis, and virtual assistants. When an artificially intelligent player developed by Google DeepMind beat Lee Sedol, an eighteen-time world champion, in the ancient Chinese strategy game Go in 2016, the machine used inventive strategies that humans had neither foreseen nor employed in the more than two millennia during which the game has been played. "Human intelligence and machine intelligences will very likely turn out to be very different," says Arora, "kind of like how jet airplanes are very different from birds."

"Deep" in this context refers to the fact that instead of going directly from the input to the output, several processing levels are involved until the output is achieved. Training such models involves algorithms known as gradient descent or back propagation, which enable the parameters to be tuned—think of a refraction eye exam to determine a prescription lens—in such a way that the output gets increasingly closer to the desired outcome. This model is inspired loosely by interconnected networks of neurons in the brain, although the brain's exact workings are still unknown.

The sheer size of deep learning models, which outstrips human comprehension, raises important computational and statistical questions, as well as the question of how to interpret what a deep model is doing. The group at the IAS is focusing on such issues. The year-long special program in 2019–20 will focus on the mathematical underpinnings of artificial intelligence, including machine learning theory, optimization (convex and nonconvex), statistics, and graph theoretic algorithms, as well as neighboring fields such as big data algorithms, computer vision, natural language processing, neuroscience, and biology.

Arora and Behnam Neyshabur, a Member in the theoretical machine learning program in 2017–18, have been studying questions related to generalization, the phenomenon whereby the machine, after it has been trained with enough samples—images of cats versus dogs, for example—acquires the ability to produce the correct answer even for samples it has never seen before, as long as they are similar enough to the training examples. This is surprising because the number of tunable parameters in today's models vastly exceeds the number of available training samples. Classically, such situations with huge models were believed to be susceptible to overfitting—when models fit themselves too closely to the peculiarities of training samples and then fail to predict well on new unseen data. Arora and Neyshabur's coauthored paper from summer 2018 shows that the "effective size" of today's trained deep nets may be smaller than the number of tunable parameters in the model. Their theory pinpoints ways in which subparts of deep nets are redundant and allows simple and provable compression of the model. This goes some way to explaining the absence of overfitting.
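
The classical picture of overfitting described above can be illustrated with ordinary curve-fitting. The sketch below is an assumption-laden toy, not the analysis in Arora and Neyshabur's paper: the six noisy training points are invented, and the helper names are hypothetical. A polynomial with as many parameters as training points fits them perfectly yet predicts worse on unseen points than a two-parameter line.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate at x the unique polynomial that passes through every (xs, ys) point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def least_squares_line(xs, ys):
    """Closed-form least-squares fit of a line; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def mse(predictions, targets):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Invented training data: noisy samples of the true relationship y = x.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [0.3, 0.7, 2.4, 2.6, 4.3, 4.8]
# Unseen test points, with noise-free targets from the true relationship.
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = list(test_x)

slope, intercept = least_squares_line(train_x, train_y)

poly_train_mse = mse([lagrange_predict(train_x, train_y, x) for x in train_x], train_y)
poly_test_mse = mse([lagrange_predict(train_x, train_y, x) for x in test_x], test_y)
line_train_mse = mse([slope * x + intercept for x in train_x], train_y)
line_test_mse = mse([slope * x + intercept for x in test_x], test_y)

print("6-parameter polynomial: train", poly_train_mse, "test", poly_test_mse)
print("2-parameter line:       train", line_train_mse, "test", line_test_mse)
```

The puzzle in deep learning is that heavily over-parameterized networks often behave like the line rather than the polynomial, which is what a smaller "effective size" would help explain.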

Another major ongoing activity in the IAS group is understanding how machine learning models can develop understanding of language. Arora and his Members have designed new techniques for capturing the meaning of sentences. "Our sentence embeddings involve simpler, more elementary techniques that compete with deep net algorithms," says Arora. "These alternatives to deep learning are much more efficient, understandable, and transparent."
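
As a rough illustration of how elementary a sentence embedding can be, the sketch below represents a sentence as a weighted average of its word vectors, with frequent words down-weighted. This is an assumed example of the general style of technique, not necessarily the group's exact method: the three-dimensional word vectors, the word frequencies, and the smoothing constant `a` are all invented for the demonstration.

```python
import math

# Hypothetical toy word vectors and word frequencies, invented for this example.
WORD_VECTORS = {
    "the": [0.5, 0.5, 0.5],
    "cat": [1.0, 0.1, 0.0],
    "dog": [0.9, 0.3, 0.0],
    "sleeps": [0.1, 1.0, 0.0],
    "naps": [0.2, 0.8, 0.1],
    "stocks": [0.0, 0.1, 1.0],
    "fell": [0.1, 0.0, 0.9],
}
WORD_FREQ = {
    "the": 0.05,
    "cat": 0.001,
    "dog": 0.001,
    "sleeps": 0.001,
    "naps": 0.001,
    "stocks": 0.001,
    "fell": 0.001,
}


def embed(sentence, a=1e-3):
    """Weighted average of word vectors; common words receive smaller weights."""
    words = sentence.lower().split()
    dim = len(next(iter(WORD_VECTORS.values())))
    total = [0.0] * dim
    for word in words:
        weight = a / (a + WORD_FREQ[word])  # assumed frequency-based weighting
        for k, value in enumerate(WORD_VECTORS[word]):
            total[k] += weight * value
    return [component / len(words) for component in total]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


sim_related = cosine(embed("the cat sleeps"), embed("the dog naps"))
sim_unrelated = cosine(embed("the cat sleeps"), embed("the stocks fell"))
print("related sentences:  ", round(sim_related, 3))
print("unrelated sentences:", round(sim_unrelated, 3))
```

Every step here is a sum or a division, which is part of what makes such methods easier to inspect than a deep network.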

IAS Member Sida Wang is interested in new models of interactive learning, specifically those where computer systems learn to respond to commands in human language. These differ from existing systems such as Siri in that the human speaker may converse in a completely different style or language than the ones involved in training the system. In Wang's models of interactive learning, the behavior of machines and humans adapts to feedback received by each. The formal treatment is borrowed from game theory: the interaction is modeled as a cooperative game where the system and the user cooperate toward their shared goal of mutual comprehension. Wang has working prototypes of such systems, including one that creates a virtual world in which a user can give commands, such as "stack three blue blocks," to which the system learns to respond. He has also created a talking spreadsheet, which likewise learns to understand simple directions, such as "add vertical grids," from a few rounds of interaction. "The systems have no in-built rules about natural languages," says Wang. "I developed a learning algorithm and invited people on the internet—non-experts—to use the program and thus train it."

The program at the Institute taps into the strengths of the theoretical machine learning program that Arora has established with colleagues at nearby Princeton University, where he is Charles C. Fitzmorris Professor of Computer Science. Arora has recruited machine learning postdocs to join the IAS community as Members, where they have access to, and interact with, leading researchers in mathematics, physics, cosmology, systems biology, social science, and the humanities.

"Being at the Institute was very different. It was really peaceful and allowed me to think about new ideas without being interrupted or stressed out about other things," says Neyshabur. "Physics is one of the closest areas to machine learning. Given the complexity of the models involved, we need to approach the problems the way physicists do. It was very useful to have conversations while at IAS with physicists who are also working on machine learning problems."

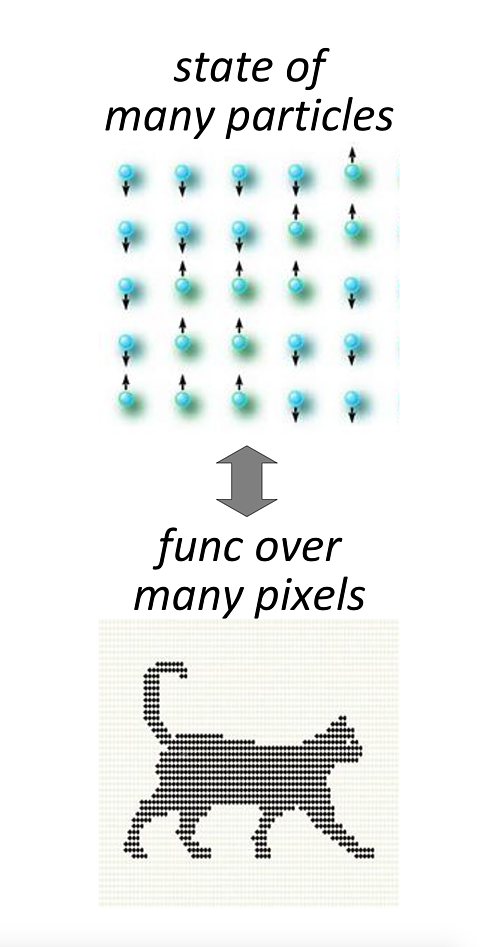

Member Nadav Cohen is interested in exploring connections between machine learning and quantum physics. He recently published a paper with colleagues on links between deep learning and quantum entanglement, with implications for network design. One of the main contributions was to show that tensor networks, a common computational tool in quantum physics, are equivalent to deep learning architectures. The equivalence paved the way for applying well-established concepts and tools from quantum physics to the theoretical study of deep learning. Specifically, Cohen and colleagues used the concept of quantum entanglement to study the dependencies that deep network models capture between input elements (e.g., pixels of an image), and applied quantum min-cut/max-flow results to analyze the effect of a deep network's architecture on the entanglement it supports, leading to new practical guidelines for deep network design.

Recommended Viewing: As part of the 2017–18 activities, Sanjeev Arora organized a public lecture series at IAS focused on theoretical machine learning, which included talks by him and Richard Zemel, Visitor in the School and a Professor of Computer Science at the University of Toronto where he serves as Research Director of the Vector Institute of Artificial Intelligence; Yann LeCun, Facebook’s chief artificial intelligence scientist and founder of the Facebook AI Research lab; and Christopher Manning, Thomas M. Siebel Professor in Machine Learning and Professor of Linguistics and of Computer Science at Stanford University. Videos are available here.

"I consider this a once-in-a-lifetime opportunity," says Cohen of his time at the Institute. "The Institute is so intimate, I am able to interact extensively with other mathematicians and physicists, which would not be possible in a typical computer science department or corporate lab. I am able to meet physicists, exchange ideas, have lunch with them. I have a lot of interest in what they do, and there is a lot of interest in what I do."

One such physicist is Guy Gur-Ari, a Member in the School of Natural Sciences in 2017–18 who was working on research related to quantum field theory, quantum gravity, and black hole physics. In April, he gave a talk at IAS, "Good and Bad Analogies of Physics in Deep Learning," on the extent to which deep learning models are similar to complex physical systems. He is now a research scientist working in machine learning at Google. Similarly, Dan Roberts, a Member in the School of Natural Sciences in 2016–18, was working on quantum information theory, black holes, and how the laws of physics are related to fundamental limits of computation before he joined Facebook AI Research as a research scientist in February.

In 2018–19, several physicists are focusing their research in part on machine learning, primarily applying machine learning to physics problems: Kyle Cranmer is using machine learning techniques in his research in high-energy physics; Brant Robertson is applying machine learning and computational methodologies to large astronomical data sets; and Yuan-Sen Ting is using theoretical modeling, observational astronomy, and machine learning in his research on the evolution of the Milky Way.

Given artificial intelligence's resources, reach, and ability to exceed the performance of any individual, its societal implications are immense and unpredictable. "I think machine learning is most certainly going to cause great disruption in our society, but we are not good at recognizing the full impact," says Arora. "Five years ago, everyone thought that Twitter and Facebook were going to lead to the end of tyranny. How naïve were we?"

As corporations and governments centralize enormous amounts of data that can be processed at very high speeds by algorithms that humans don't fully comprehend, Wigderson points to the possible fault lines involved.

"There are issues of privacy and of fairness, because these networks will be used to predict, for example, if someone is likely to pay off their bank loan, or to commit another crime," says Wigderson. "Machine learning is going to be applied everywhere, and we need to find out its limits, its fragility points. At this stage deep nets is a phenomenon that we observe and experiment with (as scientists), but we don't quite understand. It is a phenomenon in search of a theory, and this is a huge scientific and societal challenge." ––Kelly Devine Thomas, Editorial Director