An Artificially Created Universe

Starting in late 1945, John von Neumann, Professor in the School of Mathematics, and a group of engineers worked at the Institute to design, build, and program an electronic digital computer—the physical realization of Alan Turing’s Universal Machine, theoretically conceived in 1936. As George Dyson writes, the stored-program computer broke the distinction between numbers that mean things and numbers that do things.

There are two kinds of creation myths: those where life arises out of the mud, and those where life falls from the sky. In this creation myth, computers arose from the mud, and code fell from the sky.

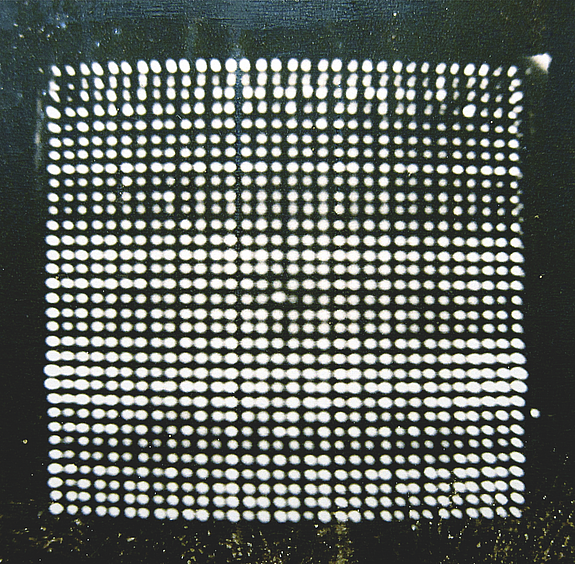

In late 1945, at the Institute for Advanced Study in Princeton, New Jersey, Hungarian-American mathematician John von Neumann gathered a small group of engineers to begin designing, building, and programming an electronic digital computer, with five kilobytes of storage, whose attention could be switched in 24 microseconds from one memory location to the next. The entire digital universe can be traced directly to this 32-by-32-by-40-bit nucleus: less memory than is allocated to displaying a single icon on a computer screen today.

Von Neumann’s project was the physical realization of Alan Turing’s Universal Machine, a theoretical construct invented in 1936. It was not the first computer. It was not even the second or third computer. It was, however, among the first computers to make full use of a high-speed random-access storage matrix, and became the machine whose coding was most widely replicated and whose logical architecture was most widely reproduced. The stored-program computer, as conceived by Alan Turing and delivered by John von Neumann, broke the distinction between numbers that mean things and numbers that do things. Our universe would never be the same.

Working outside the bounds of industry, breaking the rules of academia, and relying largely on the U.S. government for support, a dozen engineers in their twenties and thirties designed and built von Neumann’s computer for less than $1 million in under five years. “He was in the right place at the right time with the right connections with the right idea,” remembers Willis Ware, fourth to be hired to join the engineering team, “setting aside the hassle that will probably never be resolved as to whose ideas they really were.”

As World War II drew to a close, the scientists who had built the atomic bomb at Los Alamos wondered, “What’s next?” Some, including Richard Feynman, vowed never to have anything to do with nuclear weapons or military secrecy again. Others, including Edward Teller and John von Neumann, were eager to develop more advanced nuclear weapons, especially the “Super,” or hydrogen bomb. Just before dawn on the morning of July 16, 1945, the New Mexico desert was illuminated by an explosion “brighter than a thousand suns.” Eight and a half years later, an explosion one thousand times more powerful illuminated the skies over Bikini Atoll. The race to build the hydrogen bomb was accelerated by von Neumann’s desire to build a computer, and the push to build von Neumann’s computer was accelerated by the race to build a hydrogen bomb.

_____________________

In 1956, at the age of three, I was walking home with my father, physicist Freeman Dyson, from his office at the Institute for Advanced Study in Princeton, New Jersey, when I found a broken fan belt lying in the road. I asked my father what it was. “It’s a piece of the sun,” he said.

My father was a field theorist, and protégé of Hans Bethe, former wartime leader of the Theoretical Division at Los Alamos, who, when accepting his Nobel Prize for discovering the carbon cycle that fuels the stars, explained that “stars have a life cycle much like animals. They get born, they grow, they go through a definite internal development, and finally they die, to give back the material of which they are made so that new stars may live.” To an engineer, fan belts exist between the crankshaft and the water pump. To a physicist, fan belts exist, briefly, in the intervals between stars.

At the Institute for Advanced Study, more people worked on quantum mechanics than on their own cars. There was one notable exception: Julian Bigelow, who arrived at the Institute, in 1946, as John von Neumann’s chief engineer. Bigelow, who was fluent in physics, mathematics, and electronics, was also a mechanic who could explain, even to a three-year-old, how a fan belt works, why it broke, and whether it came from a Ford or a Chevrolet.

_____________________

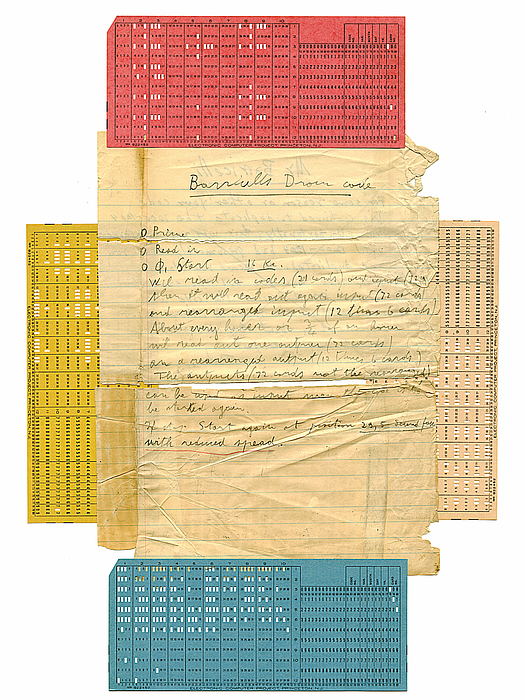

At 10:38 p.m. on March 3, 1953, in a one-story brick building at the end of Olden Lane in Princeton, New Jersey, Italian-Norwegian mathematical biologist Nils Aall Barricelli inoculated a 5-kilobyte digital universe with random numbers generated by drawing playing cards from a shuffled deck. “A series of numerical experiments are being made with the aim of verifying the possibility of an evolution similar to that of living organisms taking place in an artificially created universe,” he announced.

A digital universe—whether 5 kilobytes or the entire Internet—consists of two species of bits: differences in space, and differences in time. Digital computers translate between these two forms of information—structure and sequence—according to definite rules. Bits that are embodied as structure (varying in space, invariant across time) we perceive as memory, and bits that are embodied in sequence (varying in time, invariant across space) we perceive as code. Gates are the intersections where bits span both worlds at the moments of transition from one instant to the next.

Handwritten note and examples of punch cards related to Nils Barricelli’s work using the computer

The term bit (the contraction, by 40 bits, of binary digit) was coined by statistician John W. Tukey shortly after he joined von Neumann’s project in November of 1945. The existence of a fundamental unit of communicable information, representing a single distinction between two alternatives, was defined rigorously by information theorist Claude Shannon in his then-secret Mathematical Theory of Cryptography of 1945, expanded into his Mathematical Theory of Communication of 1948. “Any difference that makes a difference” is how cybernetician Gregory Bateson translated Shannon’s definition into informal terms. To a digital computer, the only difference that makes a difference is the difference between a zero and a one.

_____________________

In March of 1953 there were 53 kilobytes of high-speed random-access memory on planet Earth. Five kilobytes were at the end of Olden Lane, 32 kilobytes were divided among the eight completed clones of the Institute for Advanced Study’s computer, and 16 kilobytes were unevenly distributed across a half dozen other machines. Data, and the few rudimentary programs that existed, were exchanged at the speed of punched cards and paper tape. Each island in the new archipelago constituted a universe unto itself.

In 1936, logician Alan Turing had formalized the powers (and limitations) of digital computers by giving a precise description of a class of devices (including an obedient human being) that could read, write, remember, and erase marks on an unbounded supply of tape. These “Turing machines” were able to translate, in both directions, between bits embodied as structure (in space) and bits encoded as sequences (in time). Turing then demonstrated the existence of a Universal Computing Machine that, given sufficient time, sufficient tape, and a precise description, could emulate the behavior of any other computing machine.
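The kind of device described above can be sketched in a few lines of Python. This is an illustration only, not Turing's own notation: the `run` helper, the state names, and the `INCREMENT` rule table are all assumptions made for the example. The machine reads and writes marks on a tape that grows as needed, and this rule table happens to add one to a binary number.

```python
from collections import defaultdict

def run(rules, tape, state="start", head=0, max_steps=10_000):
    """Run a one-tape machine until it reaches the 'halt' state."""
    # The tape is unbounded: any cell never written reads as a blank "_".
    cells = defaultdict(lambda: "_", enumerate(tape))
    for _ in range(max_steps):
        if state == "halt":
            break
        # Look up what to write, which way to move, and the next state.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
    used = [i for i, mark in cells.items() if mark != "_"]
    return "".join(cells[i] for i in range(min(used), max(used) + 1))

# Hypothetical rule table: (state, mark read) -> (mark to write, move, next state)
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),   # scan right to the end of the number
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),   # past the last digit: turn around
    ("carry", "1"): ("0", "L", "carry"),   # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", "L", "halt"),    # absorb the carry and stop
    ("carry", "_"): ("1", "L", "halt"),    # carry past the first digit
}

result = run(INCREMENT, "1011")
```

The rule table is itself just data. Handing a different table to the same `run` function yields a different machine, which is a small-scale version of the idea behind the Universal Computing Machine.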

The results are independent of whether the instructions are executed by tennis balls or electrons, and whether the memory is stored in semiconductors or on paper tape. “Being digital should be of more interest than being electronic,” Turing pointed out.

Von Neumann set out to build a Universal Turing Machine that would operate at electronic speeds. At its core was a 32-by-32-by-40-bit matrix of high-speed random-access memory—the nucleus of all things digital ever since. “Random-access” meant that all individual memory locations—collectively constituting the machine’s internal “state of mind”—were equally accessible at any time. “High speed” meant that the memory was accessible at the speed of light, not the speed of sound. It was the removal of this constraint that unleashed the powers of Turing’s otherwise impractical Universal Machine.

_____________________

.jpg) |

|

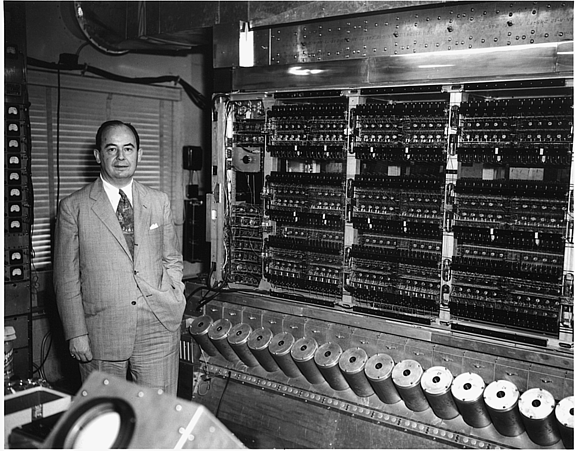

John von Neumann in front of the IAS computer, 1952. At waist level are twelve of the forty Williams cathode-ray memory tubes, storing 1,024 bits in each individual tube, for a total capacity of five kilobytes (40,960 bits). |

The IAS computer incorporated a bank of forty cathode-ray memory tubes, with memory addresses assigned as if a desk clerk were handing out similar room numbers to forty guests at a time in a forty-floor hotel. Codes proliferated within this universe by taking advantage of the architectural principle that a pair of 5-bit coordinates (2^5 = 32) uniquely identified one of 1,024 memory locations containing a string (or “word”) of 40 bits. In 24 microseconds, any specified 40-bit string of code could be retrieved. These 40 bits could include not only data (numbers that mean things) but also executable instructions (numbers that do things), including instructions to modify the existing instructions, or transfer control to another location and follow new instructions from there.
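The hotel metaphor can be made concrete with a small model. This is a sketch under stated assumptions rather than a description of the actual circuitry: the names `tubes`, `split_address`, `write_word`, and `read_word` are invented for illustration, and the choice of which tube holds which bit, and which half of the address selects the row, is arbitrary.

```python
TUBES = 40   # one tube per bit of a 40-bit word
SIDE = 32    # each tube holds a 32-by-32 grid of spots

# tubes[t][row][col] is the single bit that tube t stores at (row, col).
tubes = [[[0] * SIDE for _ in range(SIDE)] for _ in range(TUBES)]

def split_address(address):
    """Split a 10-bit address into a pair of 5-bit coordinates."""
    return address >> 5, address & 0b11111

def write_word(address, word):
    """Store bit t of the word at the same (row, col) in tube t."""
    row, col = split_address(address)
    for t in range(TUBES):
        tubes[t][row][col] = (word >> t) & 1

def read_word(address):
    """Gather one bit from the same spot in every tube."""
    row, col = split_address(address)
    return sum(tubes[t][row][col] << t for t in range(TUBES))

capacity_bits = TUBES * SIDE * SIDE   # 40 * 32 * 32
capacity_bytes = capacity_bits // 8

write_word(1023, 0xABCDE12345)        # highest address, arbitrary 40-bit value
```

Every guest gets the same room number on a different floor: one address selects the same spot in all forty tubes, so a whole word comes back in a single access. The capacity works out to 40,960 bits, or 5,120 bytes, which is the five kilobytes quoted in the text.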

Since a 10-bit order code, combined with 10 bits specifying a memory address, returned a string of 40 bits, the result was a chain reaction, analogous to the two-for-one fission of neutrons within the core of an atomic bomb. All hell broke loose as a result. Random-access memory gave the world of machines access to the powers of numbers—and gave the world of numbers access to the powers of machines.

_____________________

At the Institute for Advanced Study in early 1946, even applied mathematics was out of bounds. Mathematicians who had worked on applications during the war were expected to leave them behind. Von Neumann, however, was hooked. “When the war was over, and scientists were migrating back to their respective Universities or research institutions, Johnny returned to the Institute in Princeton,” Klári [von Neumann] recalls. “There he clearly stunned, or even horrified, some of his mathematical colleagues of the most erudite abstraction, by openly professing his great interest in other mathematical tools than the blackboard and chalk or pencil and paper.” His proposal to build an electronic computing machine under the sacred dome of the Institute was not received with applause to say the least. It wasn’t just the pure mathematicians who were disturbed by the prospect of the computer. The humanists had been holding their ground against the mathematicians as best they could, and von Neumann’s project, set to triple the budget of the School of Mathematics, was suspect on that count alone. “Mathematicians in our wing? Over my dead body! And yours?” [IAS Director Frank] Aydelotte was cabled by paleographer Elias Lowe.

Aydelotte, however, was ready to do anything to retain von Neumann, and supported the Institute’s taking an active role in experimental research. The scientists who had been sequestered at Los Alamos during the war, with an unlimited research budget and no teaching obligations, were now returning in large numbers to their positions on the East Coast. A consortium of thirteen institutions petitioned General Leslie Groves, former commander of the Manhattan Project, to establish a new nuclear research laboratory that would be the Los Alamos of the East. Aydelotte supported the proposal and even suggested building the new laboratory in the Institute Woods. “We would have an ideal location for it and I could hardly think of any place in the east that would be more convenient,” Aydelotte, en route to Palestine, cabled von Neumann from on board the Queen Elizabeth. At a meeting of the School of Mathematics called to discuss the proposal, the strongest dissenting voice was Albert Einstein, who, the minutes record, “emphasizes the dangers of secret war work” and “fears the emphasis on such projects will further ideas of ‘preventive’ wars.” Aydelotte and von Neumann hoped the computer project would get the Institute’s foot in the door for lucrative government contract work—just what Einstein feared.

Aydelotte pressed for a proposed budget, and von Neumann answered, “about $100,000 per year for three years for the construction of an all-purpose, automatic, electronic computing machine.” He argued that “it is most important that a purely scientific organization should undertake such a project,” since the government laboratories were only building devices for “definite, often very specialized purposes,” and “any industrial company, on the other hand, which undertakes such a venture would be influenced by its own past procedures and routines, and it would therefore not be able to make as fresh a start.”

_____________________

Diagram of the graphing power supply of the Electronic Computer Project, 1955

In September of 1930, at the Königsberg conference on the epistemology of the exact sciences, [Kurt] Gödel made the first, tentative announcement of his incompleteness results. Von Neumann immediately saw the implications, and, as he wrote to Gödel on November 30, 1930, “using the methods you employed so successfully . . . I achieved a result that seems to me to be remarkable, namely, I was able to show that the consistency of mathematics is unprovable,” only to find out, by return mail, that Gödel had got there first. “He was disappointed that he had not first discovered Gödel’s undecidability theorems,” explains [Stan] Ulam. “He was more than capable of this, had he admitted to himself the possibility that [David] Hilbert was wrong in his program. But it would have meant going against the prevailing thinking of the time.”

Von Neumann remained a vocal supporter of Gödel—whose results he recognized as applying to “all systems which permit a formalization”—and never worked on the foundations of mathematics again. “Gödel’s achievement in modern logic is singular and monumental . . . a landmark which will remain visible far in space and time,” he noted. “The result is remarkable in its quasi-paradoxical ‘self-denial’: It will never be possible to acquire with mathematical means the certainty that mathematics does not contain contradictions . . . The subject of logic will never again be the same.”

Gödel set the stage for the digital revolution, not only by redefining the powers of formal systems—and lining things up for their physical embodiment by Alan Turing—but by steering von Neumann’s interests from pure logic to applied. It was while attempting to extend Gödel’s results to a more general solution of Hilbert’s Entscheidungsproblem—the “decision problem” of whether provable statements can be distinguished from disprovable statements by strictly mechanical procedures in a finite amount of time—that Turing invented his Universal Machine. All the powers—and limits to those powers—that Gödel’s theorems assigned to formal systems also applied to Turing’s Universal Machine, including the version that von Neumann, from his office directly below Gödel’s, was now attempting to build.

Gödel assigned all expressions within the language of the given formal system unique identity numbers—or numerical addresses—forcing them into correspondence with a numerical bureaucracy from which it was impossible to escape. The Gödel numbering is based on an alphabet of primes, with an explicit coding mechanism governing translation between compound expressions and their Gödel numbers—similar to, but without the ambiguity that characterizes the translations from nucleotides to amino acids upon which protein synthesis is based. This representation of all possible concepts by numerical codes seemed to be a purely theoretical construct in 1931.
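The prime-based coding can be illustrated with a short sketch. It follows the standard textbook construction, in which a sequence of symbol codes becomes the exponents of successive primes. The function names and the example codes are assumptions for illustration, not Gödel's actual symbol table.

```python
def primes():
    """Yield 2, 3, 5, 7, ... by trial division (fine for short sequences)."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1

def godel_encode(codes):
    """Encode positive integers c1, c2, c3, ... as 2**c1 * 3**c2 * 5**c3 * ..."""
    number = 1
    for p, c in zip(primes(), codes):
        if c < 1:
            raise ValueError("symbol codes must be positive")
        number *= p ** c
    return number

def godel_decode(number):
    """Recover the codes by dividing out each prime in turn."""
    codes = []
    for p in primes():
        if number == 1:
            return codes
        exponent = 0
        while number % p == 0:
            number //= p
            exponent += 1
        codes.append(exponent)

# Hypothetical three-symbol expression with symbol codes 1, 2, 3.
n = godel_encode([1, 2, 3])   # 2**1 * 3**2 * 5**3
```

Because every integer factors into primes in exactly one way, decoding is unambiguous: each expression has one number, and each valid number names one expression.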

_____________________

In 1679, [Gottfried Wilhelm] Leibniz imagined a digital computer in which binary numbers were represented by spherical tokens, governed by gates under mechanical control. “This [binary] calculus could be implemented by a machine (without wheels),” he wrote, “in the following manner, easily to be sure and without effort. A container shall be provided with holes in such a way that they can be opened and closed. They are to be open at those places that correspond to a 1 and remain closed at those that correspond to a 0. Through the opened gates small cubes or marbles are to fall into tracks, through the others nothing. It [the gate array] is to be shifted from column to column as required.”

Leibniz had invented the shift register—270 years ahead of its time. In the shift registers at the heart of the Institute for Advanced Study computer (and all processors and microprocessors since), voltage gradients and pulses of electrons have taken the place of gravity and marbles, but otherwise they operate as Leibniz envisioned in 1679. With nothing more than binary tokens, and the ability to shift right and left, it is possible to perform all the functions of arithmetic. But to do anything with that arithmetic, you have to be able to store and recall the results.
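The claim that binary tokens plus shifting suffice for arithmetic can be illustrated with a short sketch. It is not a model of the IAS arithmetic unit: the function names are invented here, and the example assumes simple gates (AND, XOR) alongside the left and right shifts.

```python
def add(a, b):
    """Add non-negative integers using only XOR, AND, and a left shift."""
    while b:
        carry = (a & b) << 1   # columns where both bits are 1 carry to the left
        a = a ^ b              # sum of each column, ignoring carries
        b = carry              # repeat until no carries remain
    return a

def multiply(a, b):
    """Multiply non-negative integers by shifting and adding."""
    product = 0
    while b:
        if b & 1:              # lowest bit of b is set: add the shifted a
            product = add(product, a)
        a <<= 1                # shift left: a doubles
        b >>= 1                # shift right: move on to the next bit of b
    return product

total = add(19, 23)
area = multiply(6, 7)
```

Subtraction and division can be built from the same moves, which is the sense in which shifting right and left covers the functions of arithmetic.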

“There are two possible means for storing a particular word in the Selectron memory,” [Arthur] Burks, [Herman] Goldstine, and von Neumann explained. “One method is to store the entire word in a given tube and . . . the other method is to store in corresponding places in each of the 40 tubes one digit of the word.” This was the origin of the metaphor of handing out similar room numbers to 40 people staying in a 40-floor hotel. “To get a word from the memory in this scheme requires, then, one switching mechanism to which all 40 tubes are connected in parallel,” their “Preliminary Discussion” continues. “Such a switching scheme seems to us to be simpler than the technique needed in the serial system and is, of course, 40 times faster. The essential difference between these two systems lies in the method of performing an addition; in a parallel machine all corresponding pairs of digits are added simultaneously, whereas in a serial one these pairs are added serially in time.”

The 40 Selectron tubes constituted a 32-by-32-by-40-bit matrix containing 1,024 40-bit strings of code, with each string assigned a unique identity number, or numerical address, in a manner reminiscent of how Gödel had assigned what are now called Gödel numbers to logical statements in 1931. By manipulating the 10-bit addresses, it was possible to manipulate the underlying 40-bit strings—containing any desired combination of data, instructions, or additional addresses, all modifiable by the progress of the program being executed at the time. “This ability of the machine to modify its own orders is one of the things which makes coding the non-trivial operation which we have to view it as,” von Neumann explained to his navy sponsors in May of 1946.

“The kind of thinking that Gödel was doing, things like Gödel numbering systems—ways of getting access to codified information and such—enables you to keep track of the parcels of information as they are formed, and . . . you can then deduce certain important consequences,” says Bigelow. “I think those ideas were very well known to von Neumann [who] spent a fair amount of his time trying to do mathematical logic, and he worked on the same problem that Gödel solved.”

The logical architecture of the IAS computer, foreshadowed by Gödel, was formulated in Fuld 219.

_____________________

Using the same method of logical substitution by which a Turing machine can be instructed to interpret successively higher-level languages—or by which Gödel was able to encode metamathematical statements within ordinary arithmetic—it was possible to design Turing machines whose coded instructions addressed physical components, not memory locations, and whose output could be translated into physical objects, not just zeros and ones. “Small variations of the foregoing scheme,” von Neumann continued, “also permit us to construct automata which can reproduce themselves and, in addition, construct others.” Von Neumann compared the behavior of such automata to what, in biology, characterizes the “typical gene function, self-reproduction plus production—or stimulation of production—of certain specific enzymes.”

_____________________

Von Neumann made a deal with “the other party” in 1946. The scientists would get the computers, and the military would get the bombs. This seems to have turned out well enough so far, because, contrary to von Neumann’s expectations, it was the computers that exploded, not the bombs.

“It is possible that in later years the machine sizes will increase again, but it is not likely that 10,000 (or perhaps a few times 10,000) switching organs will be exceeded as long as the present techniques and philosophy are employed,” von Neumann predicted in 1948. “About 10,000 switching organs seem to be the proper order of magnitude for a computing machine.” The transistor had just been invented, and it would be another six years before you could buy a transistor radio—with four transistors. In 2010 you could buy a computer with a billion transistors for the inflation-adjusted cost of a transistor radio in 1956.

Von Neumann’s estimate was off by over five orders of magnitude—so far. He believed, and counseled the government and industry strategists who sought his advice, that a small number of large computers would be able to meet the demand for high-speed computing, once the impediments to remote input and output were addressed. This was true, but only for a very short time. After concentrating briefly in large, centralized computing facilities, the detonation wave that began with punched cards and vacuum tubes was propagated across a series of material and institutional boundaries: into magnetic-core memory, semiconductors, integrated circuits, and microprocessors; and from mainframes and time-sharing systems into minicomputers, microcomputers, personal computers, the branching reaches of the Internet, and now billions of embedded microprocessors and aptly named cell phones. As components grew larger in number, they grew smaller in size and cycled faster in time. The world was transformed.

Excerpted from Turing’s Cathedral: The Origins of the Digital Universe by George Dyson © 2012, reprinted by arrangement with Pantheon Books, an imprint of the Knopf Doubleday Publishing Group, a division of Random House, Inc.

Recommended Viewing: A video of a recent talk at IAS by George Dyson may be viewed at http://video.ias.edu/dyson-talk-3-12.