Apollo GPU Nodes

Access to GPU resources is restricted and requires a cluster account. To request an account please contact your school's help desk.

Hardware

The Apollo cluster contains 2xA100 nodes and 2xH200 nodes with the following configurations:

A100

A100 Apollo nodes have 8 NVIDIA A100 40GB GPUs, 2 x 64 core AMD Epyc 7742 processors, 1024 GB RAM, and 15TB of local scratch space running Springdale Linux 8.

H200

H200 Apollo nodes have 8 NVIDIA H200 141GB GPUs, NVLink, 2 x 32 core Intel Xeon-G 6430 processors, 2048 GB RAM, and 3.5 TB of local scratch space running Red Hat Enterprise Linux 8.

Configuration

All nodes mount the same /home and /data filesystems as the other computers in SNS. Scratch space locations have been tweaked to help identify local vs network resources. /scratch/lustre is the new mount point for the parallel file system and /scratch/local/ will be for any system local storage.

Scheduler

Job queuing is provided by SLURM; the following hosts have been configured as SLURM submit hosts for the Apollo nodes:

- Apollo-login1.sns.ias.edu

Access to the Apollo nodes is restricted and requires a cluster account.

Submitting / Connecting to Apollo Nodes

You can submit jobs to the Apollo nodes from apollo-login1.sns.ias.edu by requesting a gpu resource. A job submit script will automatically assign you to the appropriate queue. At this time we are enforcing a maximum of four gpus per job.

A100 Nodes

A100 GPU resources can be requested by using --gpus=1, --gres=gpu:1, or --gpus-per-node=1 :

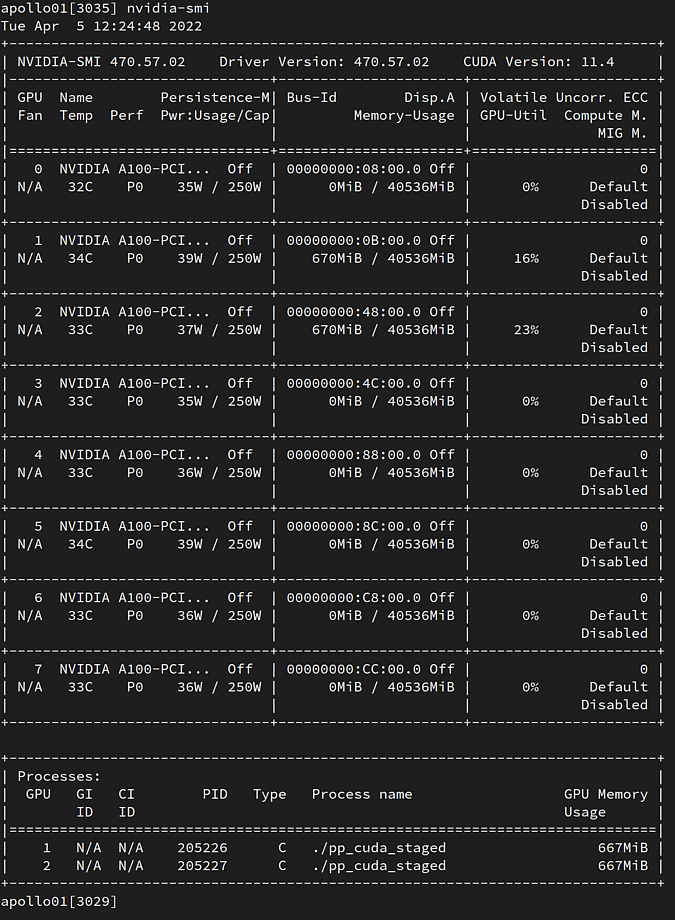

srun --time=1:00 --gpus=1 nvidia-smi

srun --time=1:00 --gres=gpu:1 nvidia-smi

srun --time=1:00 --gpus-per-node=1 nvidia-smi

H200 Nodes

H200 GPU resources are requested similarly to A100 nodes with the inclusion of the partition flag: "partition=h200"

srun --time=1:00 --gres=gpu:1 --partition=h200 nvidia-smi

srun --time=1:00 --gpus-per-node=1 --partition=h200 nvidia-smi

You can ssh to an Apollo node once you have an active job or allocation on said node:

apollo-login1$> salloc --time=5:00 --gpus=1

salloc: Granted job allocation 134

salloc: Waiting for resource configuration

salloc: Nodes apollo01 are ready for job

Qos Limits

QOS | Time Limit | GPUs per user | Jobs per user | Nodes |

|---|---|---|---|---|

gpu-short | 24 hours | 4 | 2 | A100 |

gpu-long | 72 hours | 4 | 2 | A100 |

gpu-h200 | 24 hours | 8 | 1 | H200 |

Checking GPU Usage

You can check GPU usage by using the nvidia-smi command. Please be aware that you will need to be logged in to a GPU node to run nvidia-smi or use srun to use an already allocated GPU job with srun --jobid=$<JOBID> nvidia-smi. You are able to ssh interactively to any node that you have an active job assigned.