A Connector’s Connector

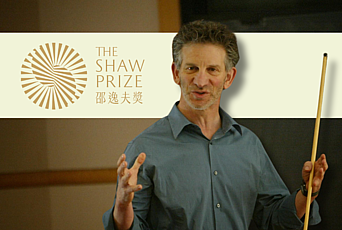

To innumerable degrees, Peter Sarnak, Professor Emeritus in the School of Mathematics, is connected. “He is extraordinarily broad and influential,” Helmut Hofer, Hermann Weyl Professor in the School, said of his colleague. “He’s a connector.” Jacob Tsimerman, a mathematician at the University of Toronto and a former Ph.D. student of Sarnak’s, agreed: “Peter is definitely one of the most connected mathematicians in the world.”

According to the Mathematics Genealogy Project, Sarnak has 57 students and 221 descendants. Another metric is his tally of 7,648 citations by 3,567 authors on MathSciNet, the American Mathematical Society’s bibliographic database.

One of those authors is the Yale computer scientist Daniel Spielman. Spielman delivered the opening lecture at “Visions in Arithmetic and Beyond: Celebrating Peter Sarnak’s Work and Impact,” a conference held at the Institute and neighboring Princeton University in June. To meet the moment, Spielman zeroed in on Sarnak’s most famous paper: “Ramanujan Graphs.” Published in 1988, the paper was co-authored with two past IAS scholars, frequent Visiting Professor in the School of Mathematics, Alexander Lubotzky, and Ralph S. Phillips, Member (1939–40, 1950–51) in the School. The “Ramanujan Graphs” paper inaugurated and introduced the namesake concept. It is Sarnak’s most cited paper, 531 hits and counting.

The import of the research is not merely due to the profound result, but also to its power in computer science and its iterative impact back in mathematics. All in all, Ramanujan graphs are maximal examples of how ideas transmit and transform along the increasingly blurry continuum of pure versus applied mathematics.

“I have often described these Ramanujan graphs as the most amazing gift that pure mathematicians have given to theoretical computer scientists,” said Spielman at the outset of his talk. He acknowledged that colleagues sometimes counter with, “‘Well, what about Fourier analysis?’ And, yeah, that’s good too. But that’s not a gift,” he said. Spielman thinks of Fourier analysis as plumbing, or paved roads— it’s part of the infrastructure; it’s a time-honored staple of the science curriculum.

Ramanujan graphs, by contrast, are a great gift because, first, “we would have never bought it for ourselves,” he said. “We wouldn’t have known where to find it! I mean, why did they call them Ramanujan graphs? Because the way they proved this is to exploit proofs of the Ramanujan conjecture, and this is not a part of what computer scientists usually learn in their education.”

Rooted in the work of Srinivasa Ramanujan (1887–1920), the namesake graphs owe their existence to clever use of proofs of Ramanujan’s conjecture. The conjecture is a deceptively simple statement about modular forms, objects in the domain of number theory. It is one of the most important conjectures ever made, articulated by Ramanujan in his 1916 paper “On Certain Arithmetic Functions.”

Notably, a half century later, the Norwegian American mathematician Atle Selberg, a 1950 Fields Medalist and Professor in the School of Mathematics (1951–2007), published a related paper “On the estimation of Fourier coefficients of modular forms”— which, indirectly, would become a crucial inspiration for “Ramanujan Graphs.” Ramanujan graphs live in the domain of combinatorics: the art of counting, among the oldest subjects in mathematics. In this domain, graphs are all about enumerating connections. A graph is a collection of points and lines, a set of vertices and a set of edges. “Any two vertices are either joined, or they’re not,” Sarnak said. The edges connect a subset of vertices, and thereby represent a relation. “You have a set of objects, and you have some relations, and you want to prove that those relations are enough to force the existence of some other set.” When every vertex has the same number of edges, and thus is connected to the same number of neighbors—that number being “d”—then the graph is said to be “d regular.” The first interesting case is d=3. Sarnak added: “Also, d=3 leads to the sparsest graphs, so indeed may be the most interesting case.”

The world wide web can be modeled as a graph: a vertex represents a website, and a line indicates a hyperlink. Google’s search algorithm, the PageRank algorithm, is based on this kind of graph theory. “You can imagine that many engineering problems, many complexity problems, involve understanding some properties of these graphs,” said Sarnak. When he lectures on Ramanujan graphs, Sarnak begins by spelling out the concept in simple enough terms with his signature handwritten slides:

Sparsity and high-connectedness are properties that pertain more generally to a larger family of graphs: expander graphs. Expander graphs extend and augment —“expand”—the representation of information or network connectivity in an efficient and robust way. The sparsity property means that each vertex is only connected to a small number of other vertices; the number of edges, or connections, is much smaller than the maximum possible. Despite the sparsity, the highly connected property means there are many short paths between any two vertices in the graph.

The sparsity-connected interplay and tension facilitates large graphs, making them scalable; and it makes the graphs especially useful because information or signals propagate quickly, and data is efficiently processed and stored.

“The existence of expanders is counterintuitive,” said Sarnak. “Very well-known mathematicians made conjectures which, once pruned down and understood, were saying essentially, ‘Expanders don’t exist.’” And by extension, the existence of Ramanujan graphs is even more counterintuitive: because Ramanujan graphs are a special class of expander graphs that possess optimal sparsity and connectivity. “They are the best possible,” Sarnak said. “You cannot do better. That’s why they’re interesting. And there’s a tension between sparse and highly connected. That’s what makes them useful in all sorts of applications.”

Antecedents of expanders—before they were so named—extend from various directions. By 1956, John von Neumann, Professor (1933–55) in the School of Mathematics, had composed the rudiments of an expander while investigating fault tolerant circuits. He described this work in the paper, “Probabilistic logics and the synthesis of reliable organisms from unreliable components.”

In his talk, Spielman reckoned that future developments stem from von Neumann. “I suspect that people studying von Neumann’s paper is one of the main ways we got the notion of expansion,” said Spielman. “People had read his paper and knew about his paper all around the world.”

In 1967, in Russia, Andrey Kolmogorov, among the greatest Russian mathematicians of the twentieth century, published a related paper with Y. M. Barzdin, “On the Realization of Networks in Three-Dimensional Space.” By way of example at the outset, they mentioned not only logic networks but also neuron networks—they asked: How many neurons and synapses can fit in a brain? “In the brain, you have a lot of vertices, but a fixed volume,” said Alexander Gamburd, a mathematician at The Graduate Center, CUNY; Member in the School of Mathematics (2005–06, 2007–08, 2022); and a longtime collaborator of Sarnak’s. “You clearly want the vertices, or neurons, to be highly connected, but there is a sparsity constraint.”

“The necessity of sparsity could be seen in the case of the neural network in the brain,” said Gamburd. “Identifying synapses with the vertices and dendrites with the edges, one can view the network of neurons in the brain as a graph; since the ‘wires’ have finite thickness, their total length cannot exceed the quotient of the average volume of one’s head and the area of the wires cross-section.” Bardzin’s contribution was to confirm Kolmogorov’s calculation about a neural net under such constraints. It was a crude model of the brain, but in the course of verifying this result, Barzdin constructed the equivalent of an expander.

It wasn’t until 1973 that expander graphs were formally defined, and named, by the Russian mathematician Mark Pinsker with the paper, “On the complexity of a concentrator.” Pinsker, who worked in information theory, probability theory, and coding theory, used a probabilistic argument to prove the existence of expanders. And indeed, it seemed that expanders could only be generated randomly. Any deliberate, rational attempt to make one produced a graph that did not possess the coveted properties.

However, Pinsker, in his paper, referred to work (yet unpublished; appearing later in 1973) by his countryman, mathematician Gregory Margulis, Member (1991, 2006) in the School of Mathematics. Margulis’s feat was to construct an explicit expander— that is, to construct a deterministic graph according to a mathematical formula that explicitly sets out which vertices and edges should be connected. As a result, explicit expander graphs could be designed for implementation in any application —in computer science, coding theory cryptography, and other fields that require highly connected, sparse, scalable, and robust graph structures.

The construction of explicit expanders thereafter became something of an industry.

Sarnak arrived on the mathematical scene in the mid1970s. He did his Ph.D. in mathematics at Stanford with Paul Cohen, Member (1959–61, 1967) in the School of Mathematics. Cohen had revolutionized set theory by solving the first of David Hilbert’s problems from his 1900 list. This work earned Cohen a Fields Medal in 1966, still the only instance of the award for mathematical logic.

Sarnak had intended to pursue logic with Cohen, but when he arrived at Stanford in 1976, his advisor had moved on—he had redirected his attention toward another of Hilbert’s problems: the Riemann hypothesis. Posed by German mathematician Bernhard Riemann in 1859, the hypothesis addresses the distribution of prime numbers, and in so doing asserts that some nontrivial zeros— zeros of the Riemann zeta function— lie on a critical line. Sarnak happily joined Cohen in bouncing around ideas. Cohen suspected that related work by Selberg might be useful. “So, I had the privilege of learning Selberg’s work as an equal of Cohen,” said Sarnak.

In 1984, after a few years at NYU’s Courant Institute, Sarnak took up the position of associate professor at Stanford, where he commenced a series of fruitful collaborations with Ralph S. Phillips. One line of investigation they probed was Selberg’s compelling contribution, not to the Riemann hypothesis, but rather to the Ramanujan conjecture. And in fact, by this time the Ramanujan conjecture, singular, had evolved into the Ramanujan conjectures, plural. The idea proved so compelling that over the years it was reinterpreted— propagated and transformed into various potent reformulations.

The two problems—the Riemann hypothesis and the Ramanujan conjecture, although distinct and dissimilar problems, are not entirely unrelated. The connection comes via those nontrivial zeroes. Selberg had provided a generalization of the Ramanujan conjecture—and Robert Langlands, Professor Emeritus in the School of Mathematics, took it even further. Selberg’s general case became known as the Ramanujan-Selberg conjecture. It remains unsolved, but the best results to date are due to Sarnak and collaborators.

Over the course of their Ramanujan investigations, Sarnak and Phillips became aware, tangentially, of an “order,” of sorts, issued by the combinatorist Noga Alon, frequent Member and Visiting Professor in the School of Mathematics, now at Princeton, and the computer scientist Ravi Boppana, at the Massachusetts Institute of Technology. They had an inkling that their current line of thinking might be relevant.

In a 1986 paper, Alon and Boppana showed that there was a limit to how good an expander graph could be. Measuring a graph’s connectivity and expansion properties had become a going and growing concern. The precursors here date back to the early 1970s in the domain of differential geometry, and courtesy of Jeff Cheeger, Member in the School of Mathematics (1972, 1977–78, 1995), now at the Courant Institute. To describe it using technical terms of the art, Cheeger’s contribution was to establish very useful inequalities between an isoperimetric constant h—the Cheeger constant, which is difficult to compute—and the spectrum of the Laplacian. The upshot: an analogue of these entities was developed for, and became central in the study of, expander graphs. And the bottom line: “You want h to be big,” said Sarnak. “That h should be not small implies zillions and zillions of beautiful properties, and one property is there should be no bottlenecks, like a traffic jam. If you’re trying to make an efficient network, you don’t want a bottleneck.”

In an ideal world, there would be no limit on the beautiful properties of an expander graph. Alon and Boppana, however, showed, by a reformulation of the measure of a graph’s structure and expansion properties—the so-called spectral gap measure, which emerged from the domain of linear algebra—that an expander graph could not exceed a certain threshold. There was an upper bound. “You want this gap to be as big as possible,” said Sarnak. “The bigger the gap, the better: the more of an expander your graph will be.”

Alon and Boppana, in delineating the upper bound, put out a call for such an optimal expander graph.

Sarnak and his collaborators delivered.

He and Phillips got a start and made some progress. Significant traction on the optimal property came when Sarnak ran it by his friend Lubotzky, then also at Stanford: given the linear algebra reformulation of the graph’s expansion measure, Lubotzky suggested that they explore some seemingly apropos methods in the domain of number theory. “The graphs we made were built out of number theory,” said Sarnak.

The mathematicians exploited a series of results on the namesake Ramanujan conjecture and its reformulations. All they needed in order to construct the graphs were the simplest cases of the Ramanujan conjecture due to the German number theorist Martin Eichler, Member in the School of Mathematics (1964–65). The proof of the original Ramanujan conjecture was due to the Institute’s Pierre Deligne, Professor Emeritus in the School of Mathematics. Deligne accomplished this feat through deploying fancy machinery by the German-born French mathematician Alexander Grothendieck, who was for a time stateless. With this work Deligne won the Fields Medal. Rallying these more sophisticated resources, when all was said and done, Lubotzky, Phillips, and Sarnak were able to verify the existence of an infinite family of optimal expander graphs—the best possible expanders: Ramanujan graphs.

The construction was entirely explicit—the graphs essentially came with a blueprint for application. “You can implement it instantly,” said Sarnak. “I give you the vertices,” he explained—the names may be numbers, or other mathematical terms of reference. “And I tell you, for example, ‘Connect vertex five to vertex seven.’ I give you the best configuration with a formula.”

In 1988, the researchers published their result in the journal Combinatorica. Independently, from behind the Iron Curtain, Margulis delivered the same result, using the same Ramanujan input and a similar construction. He published it the same year and eventually smuggled out a copy to Sarnak via a mutual connection at Stanford.

These days, Lubotzky gets questioned by the younger generation as to why the authors didn’t publish their momentous paper in a top journal like the Annals or Inventiones. “Combinatorica is a great niche journal,” he said. “We didn’t realize the wider import at the time.”

“In computer science, this was sought after,” said Sarnak. “But more so than we realized. It became a hit.” And it is still very much a going concern.

Sarnak has a bet with Noga Alon about the chances that any regular expander graph generated at random is Ramanujan, i.e., optimal. Alon says that nearly all regular random graphs are Ramanujan; Sarnak says that almost none are. Progress on this front was outlined at the Sarnakfest by the physicist Horng-Tzer Yau of Harvard University, a frequent Member in the School of Mathematics, who lectured on “Spectral Statistics of Random Regular Graphs”— work which he conducted jointly with Jiaoyang Huang, Member in the School (2019–20), and Theo McKenzie. Given what he heard, Sarnak expects a resolution soon. “They are very close to proving that these obey a basic random matrix law,” he said.

“It turns out we are both going to lose,” said Sarnak. He expanded: “Noga said with probability one that the graph is Ramanujan. I say with probability one that the graph is not Ramanujan. It’s going to be that with probability 51% the graph is bipartite Ramanujan, and with probability 27% it is nonbipartite Ramanujan—in the former case he has to pay me, and in the latter case, I have to pay him. We are both wrong, officially.”

More tangibly, applications of Ramanujan graphs —a.k.a. L.P.S. Ramanujan graphs, or L.P.S. graphs—abounded back in the day and continue to proliferate: in algorithm design, data storage, and cryptography. And in quantum information theory, quantum expander graphs scramble information.

In 2006, Avi Wigderson, Herbert H. Maass Professor in the School of Mathematics, published a 123-page survey, “Expander Graphs and Their Applications,” with co-authors Shlomo Hoory and Nathan Linial. The authors wrote, “It is not surprising that expanders are useful in the design and analysis of communication networks. What is less obvious is that expanders have surprising utility in other computational settings such as in the theory of error correcting codes and the theory of pseudorandomness… they play a key role in the study of Monte-Carlo algorithms in statistical mechanics.” They added, “The list of such interesting and fruitful connections goes on and on with so many applications we will not even be able to mention.” Wigderson estimated that an updated survey today would be triple the size.

Because Wigderson chronicled the abundant utility of expanders, Sarnak calls Wigderson “the expander man.” Wigderson’s survey also includes his own “zig-zag product,” devised with Visitors in the School of Mathematics Omer Reingold (1999–2003) and Salil Vadhan (2000–01). The zig-zag provided a new construction—the first combinatorial (nonalgebraic) construction—of explicit expanders that were much easier to understand, and arguably more flexible. Yet, at once, the zig-zag expanders were more difficult to construct and non-optimal. “The originals were not optimal, but later constructions made them near optimal,” Wigderson said. At any rate, Wigderson doesn’t see the point of evaluating trade-offs or pros and cons. “Math benefits from having different solutions of different natures to the same problem as they reveal connections between fields,” he said, “and sometimes different properties are useful for different applications.”

Wigderson’s epic survey also gathers in Daniel Spielman’s early work, which in part motivated his invitation as the opening speaker at Sarnakfest.

More recent results—with collaborators Adam Marcus, von Neumann Fellow in the School of Mathematics (2016–17), and his former Ph.D. student Nikhil Srivastava, Member (2010–11) and Visitor (2012) in the School, now at UC Berkeley and the Simons Institute for the Theory of Computing—have refined and advanced the construction of Ramanujan graphs, and extended their applications. Notably, one result managed to construct a Ramanujan graph without using number theory.

“Computer scientists didn't like the fact that our proof used such fancy number theory stuff,” said Sarnak. “They wanted to find their own way of understanding.”

But for thirty years, nobody matched it. Spielman and collaborators managed an existence proof— although their constructions were not explicit. In that sense, they did not quite match the Lubotzky-Phillips -Sarnak miracle. But in another sense, as Sarnak pointed out, “They did something better: they do so for all degrees, d≥3.”

The quantum realm, a space filled with challenges, also makes use of Ramanujan graphs—and notably ones that have migrated into higher dimensions and evolved into Ramanujan complexes, along with other high-dimensional expanders (or “HDXs”). Here, Lubotzky led the way, while Spielman enjoyed being a spectator. “Admittedly, it wasn’t clear to me that this was going to be useful, but it totally was. It recently brought us amazing advances in quantum locally testable codes.”

Another leader in this domain is Irit Dveer Dinur, a theoretical computer scientist who joined the Faculty of the School of Mathematics in August 2024. Ramanujan complexes, the higher dimensional analogue of Ramanujan graphs, are “miraculous,” Dinur said. “The construction feels like something that really shouldn’t exist. And if you see something that shouldn’t exist, and you have intuition why it shouldn’t exist, but it does exist, then it is a very powerful thing. It allows you to do things that are almost impossible.”

For a long time, Dinur’s domain was stuck: The technical pursuit, she explained, was “to construct codes and even more sophisticated objects called PCPs, a type of robustified proof, that are both sparse and locally testable, which requires a lot of redundancy”—redundancy is a kind of connectedness, but on a higher level. “Ramanujan complexes and other higher dimensional expanders give you sparsity, and also super redundancy,” Dinur said. “And that’s what allowed people to construct these quantum locally testable codes.” These constructions were not based on Ramanujan complexes, Dinur clarified, but they are part of the theory. “They are based on high-dimensional expansion, which is a property of Ramanujan complexes. Once you study Ramanujan complexes, and understand this property, you can take it aside and modify it in a way that gives you these quantum codes.”

Wrapping up his lecture at the Sarnak conference, Spielman called this quantum line of investigation “one of the more surprisingly successful intellectual endeavors of the last decade in mathematics and computer science.” He said, “Catch me over tea if you want to hear me rave about that.”

During the Q&A that followed the lecture, Sarnak offered more of a retrospective rave. He described the link between Ramanujan graph constructions that looped in an episode in Institute history. He recounted how Spielman and company’s proof uses—as a critical input that does heavy lifting—the Lee-Yang theorem, by the physicists Tsung-Dao Lee and Chen-Ning Yang, later reformulated by Elliott Lieb, Visitor in the School of Natural Sciences, and Olav Heilmann.

Lee and Yang both served as Faculty in the School in the 1950s while proving that theorem. “It’s not such a great contribution,” Yang once commented, “but I fondly consider it as a minor gem.” (For their work on chirality around the same time, Lee and Yang won the 1957 Nobel Prize in Physics.) While at the Institute, they conversed with von Neumann and Selberg, and they drew directly from the mathematician George Pólya’s attempts at the Riemann hypothesis.

Notably, Sarnak said, the Spielmanet-al proof uses the Lee-Yang theorem —“a theorem which ensures that certain polynomials have all their zeros on a circle.” And similarly, he said, the Lubotzky-Phillips-Sarnak proof uses a proof by the visionary André Weil, Professor in the School of Mathematics (1958–98), “which is known as the ‘Riemann Hypothesis for curves over finite fields’ and asserts that related polynomials have their zeros on a circle.”

Where Sarnak and company had used a number theory theorem, Spielman and company used a statistical physics theorem: “So Lee-Yang is replacing Weil,” said Sarnak. “Both those theorems have the same flavor.”

“Oooh, that I didn’t know,” said Spielman. “Great connection.”

Correction: January 24, 2025

An earlier version of this article referred to Alexander Grothendieck as a German mathematician. Though born in Germany, Grothendieck was for a long time stateless, and spent his life in France.